insufficient system resources exist to complete the requested service

- Thread starter fermulator

- Start date

- Joined

- 5 Dec 2001

- Messages

- 6,498

An explanation of the 4 GB RAM limit, from Microsoft:

http://blogs.msdn.com/oldnewthing/archive/2006/08/14/699521.aspx

Here are the memory limits for Windows Vista on 32 bit and 64 bit:

http://msdn2.microsoft.com/en-us/library/aa366778.aspx

8 TB is only available with IMAGE_FILE_LARGE_ADDRESS_AWARE. (The application has to be compiled as 64-bit; Visual C++ does not do this by default, so most applications are 32-bit and cannot use the 8 TB limit that might otherwise be available.)

PAE, to enable access to more than 4 GB of RAM on 32-bit Windows:

http://msdn2.microsoft.com/en-us/library/aa366796.aspx

How PAE works:

http://technet2.microsoft.com/windo...7a42-40f2-8a01-8de61dccd8c91033.mspx?mfr=true

- Joined

- 24 Jan 2002

- Messages

- 12,388

here ya go

http://www.multicians.org/multics-vm.html

The file is hosted on a read-only share, so time-stamps don't matter.

It doesn't matter how the file is hosted; it matters how YOUR computer is processing the file. Your computer can be adding time stamps to the data that's on your box. That new data needs an image, and it obviously doesn't exist in the original file. That is the very point: the original file cannot host the new data, and THAT'S what the pagefile is for, data that has turned dirty locally.

It doesn't matter what the file does on the network; what matters is whether it's dirty on your box. If your computer changes the file on your box, it needs an image for memory reclamation, since the original file does not have that data.

An explanation about the 4 GB's of ram from Microsoft:

http://blogs.msdn.com/oldnewthing/archive/2006/08/14/699521.aspx

I know all about the limitations of 32-bit and how PAE extends addressing; this article does not address 64-bit. I also know what I need to know about physical address extension for this discussion: I know there are driver issues and I know Microsoft wrote in a fix. We both think this user is not having an issue that PAE will resolve. I think PAE has always been on since SP2, regardless of whether he has DEP enabled; if not, maybe he should give PAE a shot.

Here are the memory limits for Windows Vista on 32 bit and 64 bit:

http://msdn2.microsoft.com/en-us/li...7a42-40f2-8a01-8de61dccd8c91033.mspx?mfr=true

I think this page was written before SP2, but I'm not sure. Do you know the date it was authored?

- Joined

- 5 Dec 2001

- Messages

- 6,498

Thank you. Will read it over.

- Joined

- 5 Dec 2001

- Messages

- 6,498

User-mode virtual address space for each 64-bit process:

- 32-bit: Not applicable
- 64-bit: 2 GB; x64: 8 TB with IMAGE_FILE_LARGE_ADDRESS_AWARE; Intel IPF: 7 TB with IMAGE_FILE_LARGE_ADDRESS_AWARE

See the table at that link: User-mode Virtual Address Space (in other words, how much memory any one process can address at a time).

- Joined

- 24 Jan 2002

- Messages

- 12,388

See the table at that link: User-mode Virtual Address Space (in other words, how much memory any one process can address at a time).

Ya, I read that, X, and I could be reading it wrong, but I think the 2 gig threshold that table shows is for processes that are not configured for a 64-bit OS. Notice:

x64: 8 TB with IMAGE_FILE_LARGE_ADDRESS_AWARE

8 TB is the mathematical limit of 64 bits, as I noted in a post above.

I would think accessing files gets the large-address-aware threshold, since accessing data is done by the OS, not the app. It's not a process that is restricted due to 32-bit configuration, so I don't think there's gonna be any restriction for accessing files. I don't know that as a fact, though.

having fun with this discussion but have a 3 hour drive ahead of me

enjoy weekend xistance

- Joined

- 5 Dec 2001

- Messages

- 6,498

8 TB is not the mathematical limit:

(2^64) bytes = 16 exabytes

However, current x86-64 processors implement only 48 bits of virtual address. Read the pagetable blog entry (http://www.pagetable.com/?p=29) I posted:

(2^48) bytes = 256 terabytes

So 8 terabytes is a limit set by Windows.
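As a quick sanity check of those numbers, here is a small sketch that counts bytes rather than bits (one address per byte) and uses binary units. The helper name `address_space_bytes` is made up for illustration; the 8 TB figure is the one from the MSDN table quoted earlier in the thread.

```python
# Address-space sizes, counting bytes (one address = one byte).
TIB = 2**40  # one terabyte in binary units
EIB = 2**60  # one exabyte in binary units


def address_space_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses for a given address width."""
    return 2**address_bits


full_64 = address_space_bytes(64)   # what 64 address bits could cover
impl_48 = address_space_bytes(48)   # what 48 address bits can cover
windows_user_limit = 8 * TIB        # user-mode limit quoted from the MSDN table

print(full_64 // EIB, "EiB with 64 address bits")
print(impl_48 // TIB, "TiB with 48 address bits")
print(impl_48 // windows_user_limit, "times the 8 TB user-mode limit")
```

Either way you count, 8 TB sits well below what the address width allows, so it has to be a policy choice rather than a mathematical ceiling.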

As I said about IMAGE_FILE_LARGE_ADDRESS_AWARE, this is an option that has to be enabled when the program is built. Sure, Microsoft's products would have it enabled, but what about other people's products?
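If you want to see whether a given binary was built with the flag, it is stored as bit 0x0020 of the Characteristics field in the PE file header. Below is a rough sketch of reading it. The function names and the `make_fake_image` helper (which builds a header-only dummy so the example runs anywhere) are my own inventions for illustration, not part of any Microsoft tool.

```python
import struct

IMAGE_FILE_LARGE_ADDRESS_AWARE = 0x0020  # bit in the COFF Characteristics field


def is_large_address_aware(image: bytes) -> bool:
    """Return True if a PE image has the large-address-aware bit set."""
    if image[:2] != b"MZ":
        raise ValueError("not an MZ/PE image")
    # e_lfanew at offset 0x3C holds the file offset of the "PE\0\0" signature.
    (pe_offset,) = struct.unpack_from("<I", image, 0x3C)
    if image[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("PE signature not found")
    # Characteristics is the last 2 bytes of the 20-byte COFF file header,
    # which starts right after the 4-byte signature.
    (characteristics,) = struct.unpack_from("<H", image, pe_offset + 4 + 18)
    return bool(characteristics & IMAGE_FILE_LARGE_ADDRESS_AWARE)


def make_fake_image(characteristics: int) -> bytes:
    """Build a minimal, hypothetical header-only image for demonstration."""
    dos = bytearray(0x40)
    dos[:2] = b"MZ"
    struct.pack_into("<I", dos, 0x3C, 0x40)  # PE header follows the DOS stub
    coff = struct.pack("<HHIIIHH", 0x8664, 0, 0, 0, 0, 0, characteristics)
    return bytes(dos) + b"PE\0\0" + coff


print(is_large_address_aware(make_fake_image(0x0022)))  # flag set
print(is_large_address_aware(make_fake_image(0x0002)))  # flag clear
```

On a real machine you would pass in the bytes of an actual .exe instead of the fake image.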

I have read the paper about Multics, and what you are saying is not the same as what they are saying.

When they talk about mapping it to a hard drive, they mean executable code. They are not talking about user generated variables that are filled with whatever they want, they are not talking about actually reading a file from disk into memory.

So if I have a program that is 15 megabytes, it would only load the first megabyte, and then when required it will page fault and load the required data from the file on disk. The same thing happens with DLLs; however, they are shared across processes, so if a DLL already exists in memory it is just mapped to that copy, otherwise it page faults and is fetched from disk.

Any memory the user creates on the heap/stack (mine is on the heap, when I go grab a 1.4 GB file from a network share) will be stored in the pagefile if required, especially if it overflows main physical RAM.

I have reduced the file size to 1 GB. That will come in just under 1.9 GB of stuff in memory (had I used C instead of C++ I would not have had as much overhead). Do note, most programmers use C# these days, so the overhead I am seeing with C++ is almost nothing compared to what they would see.

Once the file is loaded into memory, I will remove the network cable from the machine, so that now the only way to get the data is by actually reading it from the pagefile/memory. If what you say is correct this will fail.

Do note, during these tests nothing is ever changed on this file. The same results have been achieved using a file that is sitting on a remote server, as well as on a local server. Even the one on the local disk still swaps what is in memory out.

Notepad, for example, edits files on the local disk. One would assume, then, that if it were cheap to just allocate memory and let it be mapped back to a file, Notepad could open files over 1 GB without any issues. But Notepad does exactly what any inefficient program would do: it copies the entire file into memory. (Try it: load a 100 MB file into Notepad and notice how Notepad now takes up 100 MB of memory.) Even if you are not planning to edit the file, Notepad will still take up that much space in memory. If what you say were true, then since the file has not been touched there would be no reason to mark the pages dirty, so the system should just map them back to the file, and when it runs out of actual RAM it would only need to keep a small record in RAM of where each page is mapped.

I am sorry Perris, but what you have been saying so far is correct only in a sense: it is correct for executable code, as I said with my 15 MB binary example, and not for any other case. Notepad shows just that. It is a naive application that copies everything into RAM, which is why it is not possible to open big files with Notepad.
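To make the distinction concrete, here is a small sketch of the two ways a program can get at file contents: copying the file into its own heap (what my test program and, apparently, Notepad do) versus asking the OS for a memory-mapped view whose pages stay backed by the file. This is only an illustration in Python using the standard `mmap` module; the function names are made up, and it is not the code from my test program.

```python
import mmap
import os
import tempfile


def read_copy(path: str, offset: int, length: int) -> bytes:
    """Naive approach: copy the whole file into the heap, then slice it."""
    with open(path, "rb") as f:
        data = f.read()  # private copy; under pressure it can only go to the pagefile
    return data[offset:offset + length]


def read_mapped(path: str, offset: int, length: int) -> bytes:
    """Mapped approach: pages are faulted in from the file on demand."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            return view[offset:offset + length]


def demo():
    """Write a small temporary file and read the same range both ways."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(b"0123456789" * 1000)
        return read_copy(path, 10, 10), read_mapped(path, 10, 10)
    finally:
        os.remove(path)


print(demo())
```

Both calls return the same bytes; the difference is where the memory behind them comes from, and a program only gets the mapped behaviour if it explicitly asks for it.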

Programmers have been using tricks for years now to only have to parse a subset of a certain amount of data because of this very reason. There is no way it will all fit into memory. When you load up a 50 MB Word doc with images and text, it will only load the page you are currently viewing, generate the "viewable" image, and then throw that out of memory, and load the next page. That way it won't ever have the full 50 MB worth of document in memory as that is inefficient.
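A minimal sketch of that kind of trick, assuming all you need is a single pass over the data: read a fixed-size chunk, process it, and let it go before reading the next one. The names `iter_chunks` and `count_lines` are hypothetical, and this says nothing about how Word itself is implemented.

```python
import io
from typing import BinaryIO, Iterator


def iter_chunks(stream: BinaryIO, chunk_size: int = 64 * 1024) -> Iterator[bytes]:
    """Yield the stream piece by piece so only one chunk is held at a time."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


def count_lines(stream: BinaryIO, chunk_size: int = 64 * 1024) -> int:
    """Count newline bytes without ever loading the whole stream."""
    return sum(chunk.count(b"\n") for chunk in iter_chunks(stream, chunk_size))


# A tiny in-memory stream stands in for a large file here.
print(count_lines(io.BytesIO(b"a\nb\nc\n"), chunk_size=2))
```

Peak memory use is bounded by `chunk_size` regardless of how big the file is.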

Here we go, found the error number on a thread:

http://forums.microsoft.com/WindowsHomeServer/ShowPost.aspx?PostID=1444560&SiteID=50

So this is definitely a Windows Vista issue that the original poster was having. All he can hope for is that Microsoft gets its act together and fixes this, as it is definitely a system bug, and one that will not be fixed by switching to 64-bit.

- Joined

- 5 Dec 2001

- Messages

- 6,498

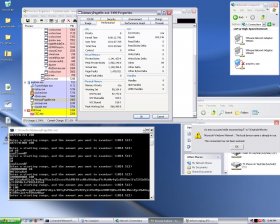

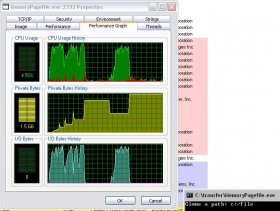

on-local-disk.jpg shows how much RAM it is taking up when loaded from the local disk, where it can read/write time-stamps to its heart's content. Meaning that if what you were saying were true, it would not have gone to the pagefile, or at least it would not have taken up that much RAM so quickly. My program is rather straightforward (check it for yourself, and come up with a counter-example that shows the behaviour you are saying exists).

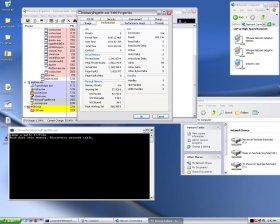

The other two show the 1 GB file loaded into memory with overhead. I then unplug the network cable (check that the mount point F is now offline) and feed my program some numbers, asking it to print out data starting from a given offset for a given length.

0 100 means start at the 0th offset in the file, read 100 characters and print them out.

10 10 means start at the 10th offset in the file, and read 10 characters.

I picked a few random ones that were in the middle of the data set, on the outside and the inside. Without a network connection, I could have written the file I held in memory onto local disk without loss of data. Data loss is what would have happened if the file had indeed been mapped to the remote location as you had said.
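For anyone who wants to repeat the experiment, the query part of the test looks roughly like this. It is a Python sketch of the idea, not my actual C++ program: the function names are made up, and deleting a temporary file stands in for pulling the network cable.

```python
import os
import tempfile


def load_file(path: str) -> bytes:
    """Copy the entire file into memory, as the test program does."""
    with open(path, "rb") as f:
        return f.read()


def answer(buffer: bytes, query: str) -> bytes:
    """Handle a query such as '0 100': start offset, then length."""
    offset_text, length_text = query.split()
    offset, length = int(offset_text), int(length_text)
    if offset < 0 or length < 0:
        raise ValueError("offset and length must be non-negative")
    return buffer[offset:offset + length]


def demo_offline_reads():
    """Load a file, make the original unreachable, then query the copy."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(b"abcdefghijklmnopqrstuvwxyz")
    buffer = load_file(path)
    os.remove(path)  # stand-in for unplugging the network cable
    return answer(buffer, "0 5"), answer(buffer, "10 10")


print(demo_offline_reads())
```

The queries still succeed after the source is gone, because the data lives in the process's own memory and not in a mapping of the original file.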

- Joined

- 24 Jan 2002

- Messages

- 12,388

I have no examples, only theory and what landy's instruction

on-local-disk.jpg shows how much RAM it is taking up, when loaded from the local disk where it can read/write time-stamp to it's hearts contends. Meaning if what you were saying were true, it would not have gone to pagefile, or at least it would not have taken up that much ram so quickly. My program is rather straight forward (check it for yourself, come up with a counter example that shows the behaviour you are saying exists).

The other two show the 1 GB file loaded into memory with overhead. I then unplug the network cable (check that the mount point F is now offline), I then feed my program some numbers to ask it to print out starting from that offset and a length.

0 100 means start at the 0th offset in the file, and read a 100 characters and print them out.

10 10 means start at the 10th offset in the file, and read 10 characters.

I picked a few random ones that were in the middle of the data set, on the outside and the inside. Without a network connection, I could have written that file I held in memory onto local disk without loss of data, which is what would have happened if the file had indeed been mapped to the remote location as you had said.

As far as "going to the pagefile" goes, hardly anything "goes to the pagefile" in the first place. New data that has no image gets mapped to the pagefile in case of a swap; the OS also prepares for swaps that may or may not happen, and that's what most people are looking at when they see "pagefile usage".

The swap doesn't happen unless your memory goes under pressure, but events are anticipated, and that's almost definitely what you are looking at.

In addition, in most cases the write doesn't happen either; it's just mapped. There's very little actually written to the pagefile even when it shows abundant usage.

When you say the one gig file is loaded into memory, what do you mean?

Did you just open it or did you work on it as well? Did you copy it from the network? Was it written to temporary files in order to play? Is another process accessing the file and creating a conflict?

all are factors

from my conversation with landy;

copy on write pages are written to the paging file; unmodified image pages are thrown away and just re-read if needed. ie, image files are never written to (unless they are recompiled/explicitly copied, etc) - they are just read from.

and he made it clear this is not just applications or executables
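Here is a rough illustration of the copy-on-write behaviour landy describes, using a private file mapping. It is only a sketch in Python (`mmap.ACCESS_COPY` asks for a copy-on-write view) with a made-up function name; it shows that a modified page lives only in memory and never reaches the original file, which is why such a page needs somewhere else, like the pagefile, to go if it is ever evicted.

```python
import mmap
import os
import tempfile


def copy_on_write_demo():
    """Modify a private mapping and compare it with the file on disk."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(b"clean page data")
        with open(path, "r+b") as f:
            # ACCESS_COPY: writes affect this process's memory only.
            with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_COPY) as view:
                view[0:5] = b"DIRTY"      # the page is now dirty and private
                in_memory = bytes(view[:])
        with open(path, "rb") as f:
            on_disk = f.read()            # the original file is untouched
        return in_memory, on_disk
    finally:
        os.remove(path)


print(copy_on_write_demo())
```

The untouched pages of such a mapping can simply be thrown away and re-read from the file; only the modified ones have no image to fall back on.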

Items are proactively written to the pagefile even before memory is claimed, but your memory is going to be used as much as possible, and data will remain in memory as long as possible.

save the following as a vb script;

Code:

    ' WinXP-2K_PageFile.vbs - Checks the current and peak usage, and allocated
    ' size of the Windows XP or Windows 2000 pagefile and optionally
    ' log and/or show the results in a popup.
    ' © Bill James - wgjames@mvps.org - Created 4 Nov 2002 - Revised 10 Nov 2002
    ' Please see the ReadMe.txt file for additional information.
    ' You can run this script manually during a Windows session, or if you have
    ' Windows XP/2000 Pro I recommend adding it as a logoff script to log results
    ' at the end of each Windows session. To implement running the script at
    ' logoff (also shutdown and restart) for Windows XP Pro or Windows 2000 Pro:
    ' Click Start, Run, gpedit.msc. Select User Configuration, Windows Settings,
    ' Scripts. In the right pane, select Logoff, Properties. Click Add, Browse.
    ' Browse to the location of this script and select it. IMPORTANT: In the
    ' "Script Parameters" box add "log" (no quotes) so the script will not show a
    ' popup which would stall your shutdown sequence. OK, Apply, OK. (You may
    ' want to first copy this script to the logoff folder and select it there)
    ' If logging is enabled (default), the results are saved in My Documents folder
    ' as PageFileLog.txt.
    '**********************************************************
    ' Three optional settings are configurable below:
    ' WriteToFile - If set to True the information will be added to a log file in
    '   your 'My Documents' folder. Of course, you want this if you are running
    '   at logoff, but you might not want it for manual checks. Changing this
    '   to False disables logging.
    ' ShowPopup - If set to True then after the script runs a message box is
    '   presented with the results. This might not be desirable when
    '   automatically running the script at logoff. False disables popup.
    ' DisplaySeconds - The number of seconds that the results popup will
    '   display. Setting this to 0 (zero) will cause the popup to remain until
    '   acknowledged.
    WriteToFile = True     'Options: True, False
    ShowPopup = True       'Options: True, False
    DisplaySeconds = 3     '0 (zero) to force OK
    '**********************************************************
    '**********************************************************
    ' You can also set the options using arguments:
    ' Syntax: [path]scriptname [log] [rpt] [t:sec]
    '   log - add results to the logfile
    '   rpt - show results in popup
    '   t:seconds - controls how long the popup message will display
    ' Example: "WinXP-2K_PageFile.vbs rpt t:5" - show popup for 5 seconds, no log.
    ' Example: "WinXP-2K_PageFile.vbs log" - log the results, no popup.
    ' Example: "WinXP-2K_PageFile.vbs log rpt t:10" - log and 10 second popup.
    ' NOTE: If ANY arguments are used, all hardcoded variables are set to
    ' false or 0, so you must specifically set which options you want.
    ' To use these options, create a shortcut to the script and add the arguments
    ' there, or the arguments can be used running the script from command line.
    '**********************************************************
    ' Do not edit below this line

    ' Command-line arguments, if any, override the hardcoded settings above.
    If WScript.Arguments.Count > 0 Then
        WriteToFile = False
        ShowPopup = False
        DisplaySeconds = 0
        For Each arg in WScript.Arguments
            If LCase(arg) = "log" Then
                WriteToFile = True
            End If
            If LCase(arg) = "rpt" Then
                ShowPopup = True
            End If
            If Left(LCase(arg), 2) = "t:" Then
                If IsNumeric(Mid(arg, 3)) Then
                    DisplaySeconds = Mid(arg, 3)
                End If
            End If
        Next
    End If

    ' Query WMI for every pagefile and collect its statistics into s.
    For Each obj in GetObject("winmgmts:\\.\root\cimv2").ExecQuery( _
        "Select Name, CurrentUsage, PeakUsage, " & _
        "AllocatedBaseSize from Win32_PageFileUsage",,48)
        s = s & vbcrlf & "Pagefile Physical Location: " & vbtab & obj.Name
        s = s & vbcrlf & "Current Pagefile Usage: " & vbtab & obj.CurrentUsage & " MB"
        s = s & vbcrlf & "Session Peak Usage: " & vbtab & obj.PeakUsage & " MB"
        s = s & vbcrlf & "Current Pagefile Size: " & vbtab & obj.AllocatedBaseSize & " MB"
    Next

    ' Prepend the new results to the log file, keeping any earlier entries.
    If WriteToFile Then
        Set fso = CreateObject("Scripting.FileSystemObject")
        logfile = CreateObject("WScript.Shell"). _
            SpecialFolders("MyDocuments") & "\PagefileLog.txt"
        If NOT fso.OpenTextFile(logfile, 1, True).AtEndOfStream Then
            With fso.OpenTextFile(logfile, 1)
                s2 = .ReadAll : .Close
            End With
        End If
        With fso.OpenTextFile(logfile, 2)
            .Write Now() & vbcrlf & s & vbcrlf & vbcrlf & s2 : .Close
        End With
    End If

    If ShowPopup Then
        WScript.CreateObject("WScript.Shell").Popup _
            s, DisplaySeconds, "WinXP Pagefile Usage Monitor by Bill James", 4096
    End If

    ' Revision History
    ' 9 Nov 2002 - Various prerelease changes
    ' 10 Nov 2002 - Tweaked to show stats where multiple drives have a pagefile

When you click on that it will tell you exactly how much is actually written to the pagefile, and you'll see hardly anything. Pagefile usage is simply mapped, and much of that is mapped in anticipation of new data, not because new data exists.

- Joined

- 5 Dec 2001

- Messages

- 6,498

Read the code. I open the file. Copy it into memory (which according to what you have said so far would mean the file would get "mapped", and not until I start making changes would it really get written in memory).

The file is never written to a temporary file, as can be seen by the code above. It gets copied into a temporary variable (should be "mapped") and then gets appended to the large variable (should still be "mapped"). No other process is accessing the file, so there is no conflict.

Sure, items are proactively written to the pagefile, but in that machine I only have 512 MB of ram. And as soon as I go over 400 MB used, I can hear the pressure go up, explorer.exe gets swapped out, so the next time I click on it, it takes a good 10 seconds to pop back up and be active in such a way that I can use it. Opening new apps takes ages as it has to swap out more of my 1 GB file that I have loaded into memory.

Key quote from the Multics paper:

In the past few years several well-known systems have implemented large virtual memories which permit the execution of programs exceeding the size of available core memory.

In this case they mean that the actual executable is larger than the available memory, or the CPU's own on-board "memory" (the name escapes me right now). The way Multics implemented it, as soon as code is called that is not yet in a page, a page fault is generated, and the code is retrieved from disk and stored in a page. Then the CPU can keep executing the code.
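A toy model of that page-fault-and-fetch cycle, for anyone who finds code easier to follow than prose. This is a deliberately simplified sketch with invented names (`DemandPager`, `read_byte`) and a least-recently-used eviction rule that I picked for convenience; it is not how Multics or Windows actually implements paging.

```python
from collections import OrderedDict


class DemandPager:
    """Toy demand pager: pages load from a read-only backing image on first use."""

    def __init__(self, backing: bytes, page_size: int, max_resident: int):
        self.backing = backing            # stands in for the executable on disk
        self.page_size = page_size
        self.max_resident = max_resident  # how many pages fit in "core"
        self.resident = OrderedDict()     # page number -> bytes, oldest first
        self.faults = 0

    def read_byte(self, address: int) -> int:
        page_no, offset = divmod(address, self.page_size)
        if page_no in self.resident:
            self.resident.move_to_end(page_no)     # mark as recently used
        else:
            self.faults += 1                       # page fault
            if len(self.resident) >= self.max_resident:
                # Clean page: discard it, it can be re-read from the image.
                self.resident.popitem(last=False)
            start = page_no * self.page_size
            self.resident[page_no] = self.backing[start:start + self.page_size]
        return self.resident[page_no][offset]


pager = DemandPager(bytes(range(16)), page_size=4, max_resident=2)
for address in (0, 1, 5, 9, 0):
    pager.read_byte(address)
print(pager.faults)
```

Note that the evicted pages here are never written anywhere, because they were never modified; that is the case the paper covers, and it is a different situation from data a program has built up on the heap.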
